Antonio Nocerino · AI & Machine Learning, Software Development, Technologies & Tools

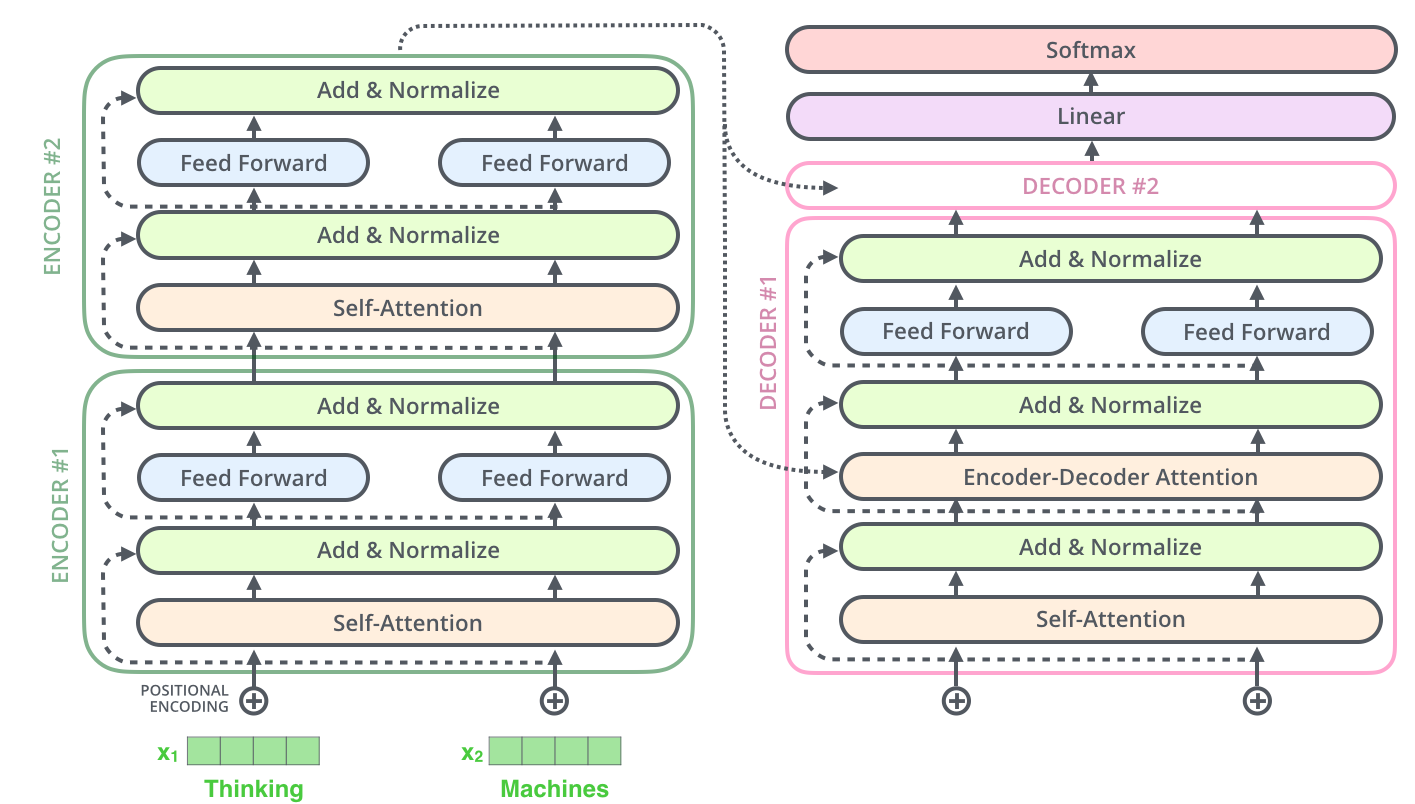

"Attention Is All You Need": Revolutionizing AI with the Transformer Architecture

This article examines the 2017 paper "Attention Is All You Need". It shows how the Transformer architecture relies on attention and processes sequences in parallel, and how this design revolutionized natural language processing (NLP) and became the foundation of large language models (LLMs).